Create a cluster

Note

These instructions are for Unity Catalog enabled workspaces using the updated create cluster UI. To switch to the legacy create cluster UI, clickUI Previewat the top of the create cluster page and toggle the setting to off.

For documentation on the non-Unity Catalog legacy UI, seeConfigure clusters. For a comparison of the new and legacy cluster types, seeClusters UI changes and cluster access modes.

This article explains the configuration options available when you create and edit Databricks clusters. It focuses on creating and editing clusters using the UI. For other methods, seeClusters CLI,Clusters API 2.0, andDatabricks Terraform provider.

The cluster creation user interface lets you choose the cluster configuration specifics, including:

Theaccess mode, which controls the security features used when interacting with data

Access the cluster creation interface

To create a cluster using the user interface, you must be in the Data Science & Engineering or Machine Learning persona-based environment. Use thepersona switcherif necessary.

Then you can either:

Click

Computein the sidebar and thenCreate computeon the Compute page.

Computein the sidebar and thenCreate computeon the Compute page.ClickNew>Clusterin the sidebar.

Note

You can also use the DatabricksTerraformprovider tocreate a cluster.

Cluster policy

Cluster policiesare a set of rules used to limit the configuration options available to users when they create a cluster. Cluster policies have ACLs that regulate which specific users and groups have access to certain policies.

By default, all users have access to thePersonal Computepolicy, allowing them to create single-machine compute resources. If you don’t see the Personal Compute policy as an option when you create a cluster, then you haven’t been given access to the policy. Contact your administrator to request access to the Personal Compute policy or an appropriate equivalent policy.

To configure a cluster according to a policy, select a cluster policy from the政策dropdown.

What is cluster access mode?

Cluster access mode is a security feature that determines who can use a cluster and what data they can access via the cluster. When you create any cluster in Databricks, you must select an access mode.

Access Mode |

Visible to user |

UC Support |

Supported Languages |

Notes |

|---|---|---|---|---|

Single User |

Always |

Yes |

Python, SQL, Scala, R |

Can be assigned to and used by a single user. To read from a view, you must have |

Shared |

Always (Premium plan required) |

Yes |

Python (on Databricks Runtime 11.1 and above), SQL |

Can be used by multiple users with data isolation among users. Seeshared limitations. |

No Isolation Shared |

管理員可以隱藏這個cluster type byenforcing user isolationin the admin settings page. |

No |

Python, SQL, Scala, R |

There is arelated account-level setting for No Isolation Shared clusters. |

Custom |

Hidden (For all new clusters) |

No |

Python, SQL, Scala, R |

This option is shown only if you have existing clusters without a specified access mode. |

You can upgrade an existing cluster to meet the requirements of Unity Catalog by setting its cluster access mode toSingle UserorShared. There are additional access mode limitations for Structured Streaming on Unity Catalog, seeStructured Streaming support.

Important

Access mode in the Clusters API is not supported.

Databricks Runtime version

Databricks Runtime is the set of core components that run on your clusters. All Databricks Runtime versions include Apache Spark and add components and updates that improve usability, performance, and security. For details, seeDatabricks runtimes.

You select the cluster’s runtime and version using theDatabricks Runtime Versiondropdown when you create or edit a cluster.

Photon acceleration

Photonis available for clusters runningDatabricks Runtime 9.1 LTSand above.

To enable Photon acceleration, select theUse Photon Accelerationcheckbox.

If desired, you can specify the instance type in the Worker Type and Driver Type drop-down.

Cluster node type

A cluster consists of one driver node and zero or more worker nodes. You can pick separate cloud provider instance types for the driver and worker nodes, although by default the driver node uses the same instance type as the worker node. Different families of instance types fit different use cases, such as memory-intensive or compute-intensive workloads.

Driver node

The driver node maintains state information of all notebooks attached to the cluster. The driver node also maintains the SparkContext, interprets all the commands you run from a notebook or a library on the cluster, and runs the Apache Spark master that coordinates with the Spark executors.

司機節點類型的默認值是same as the worker node type. You can choose a larger driver node type with more memory if you are planning tocollect()a lot of data from Spark workers and analyze them in the notebook.

Tip

Since the driver node maintains all of the state information of the notebooks attached, make sure to detach unused notebooks from the driver node.

Worker node

Databricks worker nodes run the Spark executors and other services required for proper functioning clusters. When you distribute your workload with Spark, all the distributed processing happens on worker nodes. Databricks runs one executor per worker node. Therefore, the terms executor and worker are used interchangeably in the context of the Databricks architecture.

Tip

To run a Spark job, you need at least one worker node. If a cluster has zero workers, you can run non-Spark commands on the driver node, but Spark commands will fail.

Worker node IP addresses

Databricks launches worker nodes with two private IP addresses each. The node’s primary private IP address hosts Databricks internal traffic. The secondary private IP address is used by the Spark container for intra-cluster communication. This model allows Databricks to provide isolation between multiple clusters in the same workspace.

GPU instance types

For computationally challenging tasks that demand high performance, like those associated with deep learning, Databricks supports clusters accelerated with graphics processing units (GPUs). For more information, seeGPU-enabled clusters.

AWS Graviton instance types

Databricks supports clusters with AWS Graviton processors. Arm-based AWS Graviton instances are designed by AWS to deliver better price performance over comparable current generation x86-based instances. SeeAWS Graviton-enabled clusters.

Cluster size and autoscaling

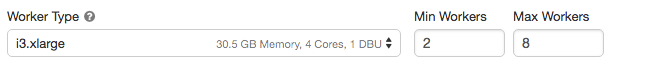

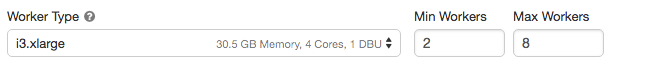

When you create a Databricks cluster, you can either provide a fixed number of workers for the cluster or provide a minimum and maximum number of workers for the cluster.

When you provide a fixed size cluster, Databricks ensures that your cluster has the specified number of workers. When you provide a range for the number of workers, Databricks chooses the appropriate number of workers required to run your job. This is referred to asautoscaling.

自動定量,磚動態ally reallocates workers to account for the characteristics of your job. Certain parts of your pipeline may be more computationally demanding than others, and Databricks automatically adds additional workers during these phases of your job (and removes them when they’re no longer needed).

Autoscaling makes it easier to achieve high cluster utilization, because you don’t need to provision the cluster to match a workload. This applies especially to workloads whose requirements change over time (like exploring a dataset during the course of a day), but it can also apply to a one-time shorter workload whose provisioning requirements are unknown. Autoscaling thus offers two advantages:

Workloads can run faster compared to a constant-sized under-provisioned cluster.

自動定量集群可以減少整體costs compared to a statically-sized cluster.

Depending on the constant size of the cluster and the workload, autoscaling gives you one or both of these benefits at the same time. The cluster size can go below the minimum number of workers selected when the cloud provider terminates instances. In this case, Databricks continuously retries to re-provision instances in order to maintain the minimum number of workers.

Note

Autoscaling is not available forspark-submitjobs.

Note

Compute auto-scaling has limitations scaling down cluster size for Structured Streaming workloads. Databricks recommends using Delta Live Tables with Enhanced Autoscaling for streaming workloads. SeeWhat is Enhanced Autoscaling?.

How autoscaling behaves

Scales up from min to max in 2 steps.

Can scale down, even if the cluster is not idle, by looking at shuffle file state.

Scales down based on a percentage of current nodes.

On job clusters, scales down if the cluster is underutilized over the last 40 seconds.

On all-purpose clusters, scales down if the cluster is underutilized over the last 150 seconds.

The

spark.databricks.aggressiveWindowDownSSpark configuration property specifies in seconds how often a cluster makes down-scaling decisions. Increasing the value causes a cluster to scale down more slowly. The maximum value is 600.

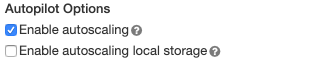

Enable and configure autoscaling

To allow Databricks to resize your cluster automatically, you enable autoscaling for the cluster and provide the min and max range of workers.

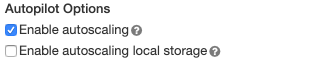

Enable autoscaling.

All-Purpose cluster - On the cluster creation and edit page, select theEnable autoscalingcheckbox in theAutopilot Optionsbox:

Job cluster - On the cluster creation and edit page, select theEnable autoscalingcheckbox in theAutopilot Optionsbox:

Configure the min and max workers.

When the cluster is running, the cluster detail page displays the number of allocated workers. You can compare number of allocated workers with the worker configuration and make adjustments as needed.

Important

If you are using aninstance pool:

Make sure the cluster size requested is less than or equal to theminimum number of idle instancesin the pool. If it is larger, cluster startup time will be equivalent to a cluster that doesn’t use a pool.

Make sure the maximum cluster size is less than or equal to themaximum capacityof the pool. If it is larger, the cluster creation will fail.

Autoscaling example

If you reconfigure a static cluster to be an autoscaling cluster, Databricks immediately resizes the cluster within the minimum and maximum bounds and then starts autoscaling. As an example, the following table demonstrates what happens to clusters with a certain initial size if you reconfigure a cluster to autoscale between 5 and 10 nodes.

Initial size |

Size after reconfiguration |

|---|---|

6 |

6 |

12 |

10 |

3 |

5 |

Instance profiles

To securely access AWS resources without using AWS keys, you can launch Databricks clusters with instance profiles. SeeConfigure S3 access with instance profilesfor information about how to create and configure instance profiles. Once you have created an instance profile, you select it in theInstance Profiledrop-down list.

Note

Once a cluster launches with an instance profile, anyone who has attach permissions to this cluster can access the underlying resources controlled by this role. To guard against unwanted access, you can useCluster access controlto restrict permissions to the cluster.

Autoscaling local storage

If you don’t want to allocate a fixed number of EBS volumes at cluster creation time, use autoscaling local storage. With autoscaling local storage, Databricks monitors the amount of free disk space available on your cluster’s Spark workers. If a worker begins to run too low on disk, Databricks automatically attaches a new EBS volume to the worker before it runs out of disk space. EBS volumes are attached up to a limit of 5 TB of total disk space per instance (including the instance’s local storage).

To configure autoscaling storage, selectEnable autoscaling local storage.

The EBS volumes attached to an instance are detached only when the instance is returned to AWS. That is, EBS volumes are never detached from an instance as long as it is part of a running cluster. To scale down EBS usage, Databricks recommends using this feature in a cluster configured withAWS Graviton instance typesorAutomatic termination.

Note

Databricks uses Throughput Optimized HDD (st1) to extend the local storage of an instance. Thedefault AWS capacity limitfor these volumes is 20 TiB. To avoid hitting this limit, administrators should request an increase in this limit based on their usage requirements.

Local disk encryption

Preview

This feature is inPublic Preview.

Some instance types you use to run clusters may have locally attached disks. Databricks may store shuffle data or ephemeral data on these locally attached disks. To ensure that all data at rest is encrypted for all storage types, including shuffle data that is stored temporarily on your cluster’s local disks, you can enable local disk encryption.

Important

Your workloads may run more slowly because of the performance impact of reading and writing encrypted data to and from local volumes.

When local disk encryption is enabled, Databricks generates an encryption key locally that is unique to each cluster node and is used to encrypt all data stored on local disks. The scope of the key is local to each cluster node and is destroyed along with the cluster node itself. During its lifetime, the key resides in memory for encryption and decryption and is stored encrypted on the disk.

To enable local disk encryption, you must use theClusters API 2.0. During cluster creation or edit, set:

{"enable_local_disk_encryption":true}

SeeCreateandEditin the Clusters API reference for examples of how to invoke these APIs.

Here is an example of a cluster create call that enables local disk encryption:

{"cluster_name":"my-cluster","spark_version":"7.3.x-scala2.12","node_type_id":"r3.xlarge","enable_local_disk_encryption":true,"spark_conf":{"spark.speculation":true},“num_workers”:25}

Cluster tags

Cluster tags allow you to easily monitor the cost of cloud resources used by various groups in your organization. You can specify tags as key-value pairs when you create a cluster, and Databricks applies these tags to cloud resources like VMs and disk volumes, as well asDBU usage reports.

For clusters launched from pools, the custom cluster tags are only applied to DBU usage reports and do not propagate to cloud resources.

For detailed information about how pool and cluster tag types work together, seeMonitor usage using cluster and pool tags.

To configure cluster tags:

In theTagssection, add a key-value pair for each custom tag.

ClickAdd.

AWS configurations

When you configure a cluster’s AWS instance you can choose the availability zone, the max spot price, and EBS volume type. These settings are under theAdvanced Optionstoggle in theInstancestab.

Availability zones

This setting lets you specify which availability zone (AZ) you want the cluster to use. By default, this setting is set toauto, where the AZ is automatically selected based on available IPs in the workspace subnets. Auto-AZ retries in other availability zones if AWS returns insufficient capacity errors.

Choosing a specific AZ for a cluster is useful primarily if your organization has purchased reserved instances in specific availability zones. Read more aboutAWS availability zones.

Spot instances

You can specify whether to use spot instances and the max spot price to use when launching spot instances as a percentage of the corresponding on-demand price. By default, the max price is 100% of the on-demand price. SeeAWS spot pricing.

EBS volumes

This section describes the default EBS volume settings for worker nodes, how to add shuffle volumes, and how to configure a cluster so that Databricks automatically allocates EBS volumes.

To configure EBS volumes, click theInstancestab in the cluster configuration and select an option in theEBS Volume Typedropdown list.

Default EBS volumes

Databricks provisions EBS volumes for every worker node as follows:

A 30 GB encrypted EBS instance root volume used by the host operating system and Databricks internal services.

A 150 GB encrypted EBS container root volume used by the Spark worker. This hosts Spark services and logs.

(HIPAA only) a 75 GB encrypted EBS worker log volume that stores logs for Databricks internal services.

Add EBS shuffle volumes

To add shuffle volumes, selectGeneral Purpose SSDin theEBS Volume Typedropdown list.

By default, Spark shuffle outputs go to the instance local disk. For instance types that do not have a local disk, or if you want to increase your Spark shuffle storage space, you can specify additional EBS volumes. This is particularly useful to prevent out of disk space errors when you run Spark jobs that produce large shuffle outputs.

Databricks encrypts these EBS volumes for both on-demand and spot instances. Read more aboutAWS EBS volumes.

Optionally encrypt Databricks EBS volumes with a customer-managed key

Optionally, you can encrypt cluster EBS volumes with a customer-managed key.

AWS EBS limits

Ensure that your AWS EBS limits are high enough to satisfy the runtime requirements for all workers in all clusters. For information on the default EBS limits and how to change them, see我azon Elastic Block Store (EBS) Limits.

AWS EBS SSD volume type

You can select either gp2 or gp3 for your AWS EBS SSD volume type. To do this, seeManage SSD storage. Databricks recommends you switch to gp3 for its cost savings compared to gp2. For technical information about gp2 and gp3, see我azon EBS volume types.

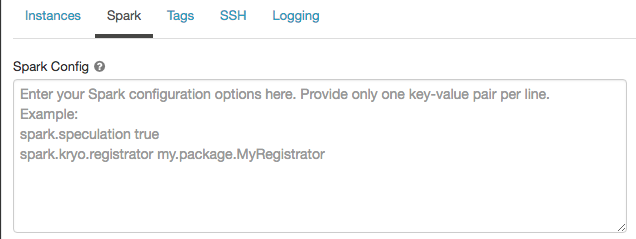

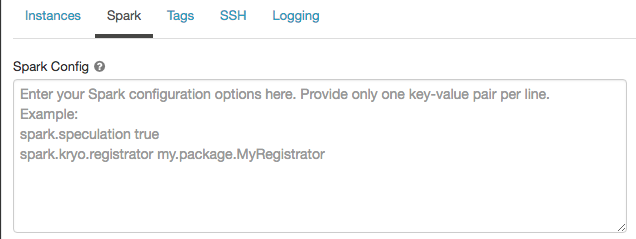

Spark configuration

微調刺激就業,你可以提供自定義的Spark configuration propertiesin a cluster configuration.

On the cluster configuration page, click theAdvanced Optionstoggle.

Click theSparktab.

InSpark config, enter the configuration properties as one key-value pair per line.

When you configure a cluster using theClusters API 2.0, set Spark properties in thespark_conffield in theCreate cluster requestorEdit cluster request.

To set Spark properties for all clusters, create aglobal init script:

dbutils.fs.put("dbfs:/databricks/init/set_spark_params.sh","""|#!/bin/bash||cat << 'EOF' > /databricks/driver/conf/00-custom-spark-driver-defaults.conf|[driver] {| "spark.sql.sources.partitionOverwriteMode" = "DYNAMIC"|}|EOF""".stripMargin,true)

Retrieve a Spark configuration property from a secret

Databricks recommends storing sensitive information, such as passwords, in asecretinstead of plaintext. To reference a secret in the Spark configuration, use the following syntax:

spark. {{secrets//}}

For example, to set a Spark configuration property calledpasswordto the value of the secret stored insecrets/acme_app/password:

spark.password {{secrets/acme-app/password}}

For more information, seeSyntax for referencing secrets in a Spark configuration property or environment variable.

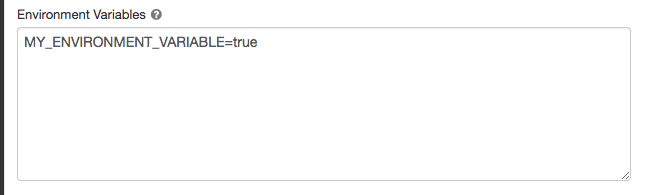

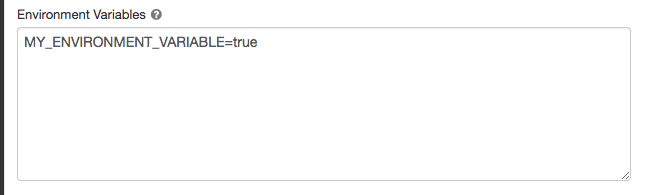

Environment variables

You can configure custom environment variables that you can access frominit scriptsrunning on a cluster. Databricks also provides predefinedenvironment variablesthat you can use in init scripts. You cannot override these predefined environment variables.

On the cluster configuration page, click theAdvanced Optionstoggle.

Click theSparktab.

Set the environment variables in theEnvironment Variablesfield.

You can also set environment variables using thespark_env_varsfield in theCreate cluster requestorEdit cluster requestClusters API endpoints.

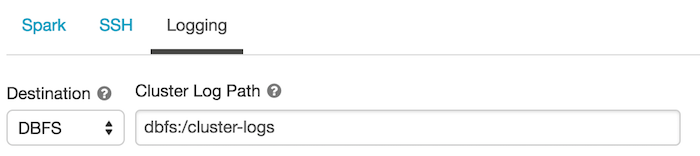

Cluster log delivery

When you create a cluster, you can specify a location to deliver the logs for the Spark driver node, worker nodes, and events. Logs are delivered every five minutes to your chosen destination. When a cluster is terminated, Databricks guarantees to deliver all logs generated up until the cluster was terminated.

The destination of the logs depends on the cluster ID. If the specified destination isdbfs:/cluster-log-delivery, cluster logs for0630-191345-leap375are delivered todbfs:/cluster-log-delivery/0630-191345-leap375.

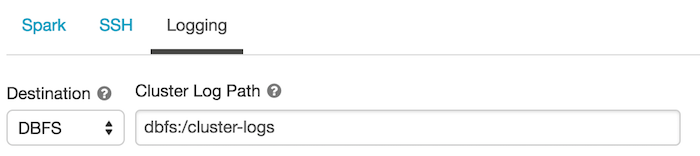

To configure the log delivery location:

On the cluster configuration page, click theAdvanced Optionstoggle.

Click theLoggingtab.

Select a destination type.

Enter the cluster log path.

S3 bucket destinations

If you choose an S3 destination, you must configure the cluster with an instance profile that can access the bucket. This instance profile must have both thePutObjectandPutObjectAclpermissions. An example instance profile has been included for your convenience. SeeConfigure S3 access with instance profilesfor instructions on how to set up an instance profile.

{"Version":"2012-10-17","Statement":[{"Effect":"Allow","Action":["s3:ListBucket"],"Resource":["arn:aws:s3:::" ]},{"Effect":"Allow","Action":["s3:PutObject","s3:PutObjectAcl","s3:GetObject","s3:DeleteObject"],"Resource":["arn:aws:s3:::/*" ]}]}

Note

This feature is also available in the REST API. SeeClusters API 2.0andCluster log delivery examples.